Startup focuses on reliable, efficient cooling for computer servers

In a dark, windy room on the top floor of Engineering Hall on the University of Wisconsin–Madison campus, racks of computers are processing information for a college that relies, like all technical fields, on massive computing power. The room is loud as well: the noise comes from multiple fans inside each computer case and from the large air conditioner that drives currents of air through the room to remove waste heat from the processors.

Equipment and electricity for cooling are a major expense at big computer installations, and Timothy Shedd, an associate professor of mechanical engineering at UW–Madison, uses the room to show a system he has invented that can do the job more efficiently.

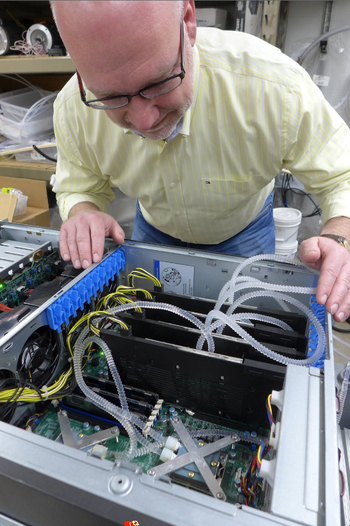

Timothy Shedd examines a computer equipped with his novel cooling system. Tubes circulate refrigerating fluid through a special heat exchanger (under the X-shaped structure) on the processor that is the biggest heat source in a computer.

Photo: David Tenenbaum

In Shedd’s system, a pair of translucent plastic tubes enters each computer case. A close look reveals a stream of tiny bubbles in the fluid exiting the case.

Those bubbles — a gas phase of the liquid refrigerant that entered the computer — are removing heat from a single computer. For several reasons, the system is, roughly speaking, 10 times more efficient than the air-conditioning that dominates the server field.

Shedd has spent more than a decade studying and designing computer cooling systems, and he has started a spinoff business called Ebullient to commercialize his invention, which is covered by patents he’s assigned to the Wisconsin Alumni Research Foundation.

Computing power degrades and then fails if chips get too hot, so cooling is a fundamental need in data centers — the warehouses full of racks of computers owned by Amazon, Google and lesser-known firms. Data centers are growing seven to 10 percent a year in the U.S., with the biggest growth in the Midwest, Shedd says.

Cooling costs at these “server farms” are about $2 billion a year, or about 10 percent of the capital cost, and just three companies make and install most of the big cooling systems, Shedd says. “Cooling can make up 50 percent of the annual operating cost, so the cost of cooling can quickly become larger than the capital cost of the computers themselves.”

Shedd’s technology has two key components: First, a plastic chamber attached to the processor absorbs heat at the point of creation. Second, a network of tubes and a pump carry the heat to the roof, where it is “dumped” to the atmosphere.

Although the system uses air conditioning refrigerant, it omits the compressor, condenser and evaporator — three major parts of the air conditioning used to cool server farms.

A few innovative computer-cooling systems use water to remove heat, Shedd says. And while water does transfer an immense amount of heat, it makes operators nervous. “If you get a few drops of water on a computer, you are done, so the companies selling that face an awful lot of resistance.”

Instead, Shedd chose a refrigerant that would not damage the computer if it spills. Because the refrigerant carries less heat than water, he must ensure that the fluid boils in the chamber atop the chip and condenses back to liquid on the rooftop heat disperser. This “phase change” ramps up the heat transfer rate without threatening the processor.
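To see why boiling matters, it helps to compare the heat a kilogram of fluid can carry away by simply warming up with the heat it can carry away by partly boiling. The short sketch below does that arithmetic. The refrigerant properties are assumed round numbers chosen only for illustration (the article does not name the fluid), and the function names are hypothetical; only the specific heat of water is a standard textbook value.

```python
# Illustrative comparison of heat removal with and without boiling.
# The refrigerant values are ASSUMED round numbers for illustration only;
# they are not measurements of Shedd's system or of any specific fluid.

def sensible_heat_kj(mass_kg, specific_heat_kj_per_kg_k, temp_rise_k):
    """Heat absorbed by a liquid that warms up without boiling: Q = m * c * dT."""
    return mass_kg * specific_heat_kj_per_kg_k * temp_rise_k


def latent_heat_kj(mass_kg, heat_of_vaporization_kj_per_kg, vapor_fraction):
    """Heat absorbed when a fraction of the liquid boils: Q = m * x * h_fg."""
    return mass_kg * vapor_fraction * heat_of_vaporization_kj_per_kg


WATER_C = 4.18           # kJ/(kg K), textbook value for liquid water
REFRIGERANT_C = 1.3      # kJ/(kg K), assumed
REFRIGERANT_HFG = 140.0  # kJ/kg, assumed heat of vaporization

# One kilogram of each fluid, warming by 10 K with no boiling.
water_warming = sensible_heat_kj(1.0, WATER_C, 10.0)
refrigerant_warming = sensible_heat_kj(1.0, REFRIGERANT_C, 10.0)

# One kilogram of refrigerant with half of it boiling into vapor.
refrigerant_boiling = latent_heat_kj(1.0, REFRIGERANT_HFG, 0.5)

print(f"water, warming 10 K:       {water_warming:.1f} kJ")
print(f"refrigerant, warming 10 K: {refrigerant_warming:.1f} kJ")
print(f"refrigerant, half boiled:  {refrigerant_boiling:.1f} kJ")
```

Under these assumed numbers, the warming refrigerant carries far less heat than water does, but once half of it boils it carries more than the water. Real fluids and operating conditions will give different figures.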

Already, his devices have been working for five months nonstop at a group of servers at the UW–Madison College of Engineering.

As server farms become even more numerous, their cooling requirements will only grow. Shedd estimates that his system can cut cooling costs by up to 90 percent, but first he’s got to prove that it’s safe and reliable. “In talks with the industry, they’ve stressed that over and over. Our system has a built-in redundancy — instead of one loop of coolant into each server, we have two, and we have duplicate pumps as well.”