Next-generation Large Hadron Collider relies on UW–Madison computing

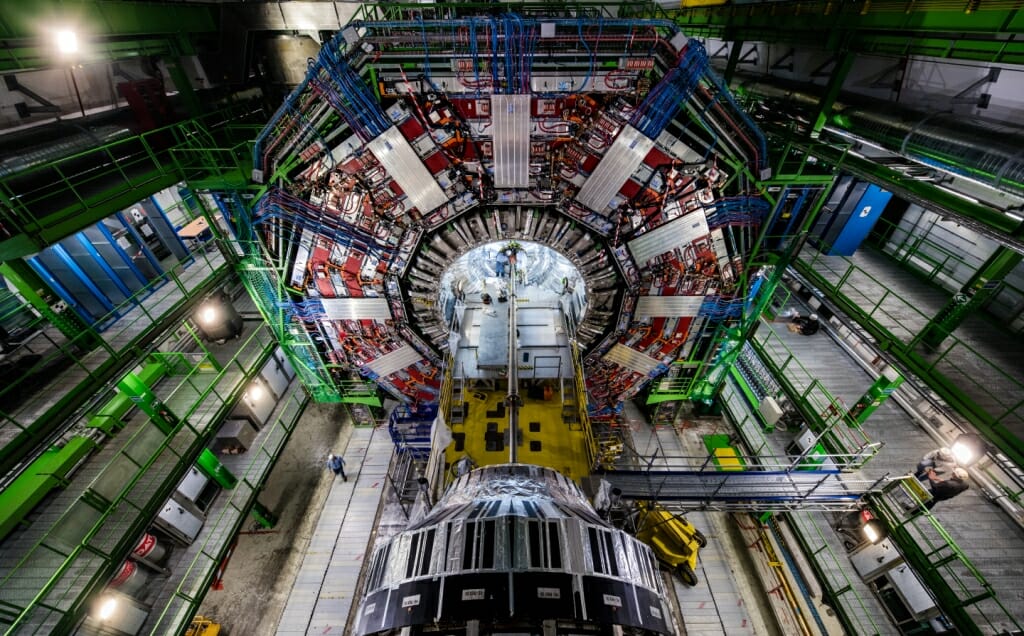

The Large Hadron Collider near Geneva, Switzerland, is in the process of an upgrade that will increase the number of particle collisions possible, generating a wealth of new data. UW–Madison will help create software to handle that data.

In July 2012, scientists at CERN, the European Organization for Nuclear Research in Geneva, Switzerland, announced the discovery of a fundamental particle critical to our understanding of the universe: the Higgs boson.

They made the discovery using the largest machine in the world, a powerful particle accelerator called the Large Hadron Collider (LHC). Today, the LHC is being upgraded to the High-Luminosity Large Hadron Collider (HL-LHC). The new accelerator, which should be operational in 2026, will increase the number of particle collisions it can produce, generating a wealth of new data.

But this massive trove of physics data also has to be processed. With $25 million in funding, the National Science Foundation (NSF) has just announced the launch of the Institute for Research and Innovation in Software for High-Energy Physics (IRIS-HEP) to develop the software needed to do so. UW–Madison's Center for High Throughput Computing (CHTC) will receive $2.2 million to support its work as part of IRIS-HEP.

“Even now, physicists just can’t store everything that the LHC produces,” said Bogdan Mihaila, the NSF program officer overseeing the award. “Sophisticated processing helps us decide what information to keep and analyze, but even those tools won’t be able to process all of the data we will see in 2026. We have to get smarter and step up our game. That is what the new software institute is about.”

Hundreds of thousands of computers make up the HL-LHC's global computing network. CHTC will address the challenges of integrating and deploying the required physics software and its supporting infrastructure. Located within the Department of Computer Sciences, CHTC has been the principal institution of the Open Science Grid (OSG), a U.S. consortium that supports the Large Hadron Collider as well as many other research projects across a wide range of disciplines.

“Software integration is crucial in a project like this one, in which software development is distributed nationally and even internationally,” said Tim Cartwright, principal investigator for the UW–Madison arm of the OSG and its chief of staff.

The OSG grew out of the need to handle vast amounts of data from high-energy physics, and specifically to meet the needs of the LHC. It performs an array of high-throughput computing tasks, and the CHTC team helps integrate, test, distribute and support the necessary software. The OSG's other duties include training, education and outreach.

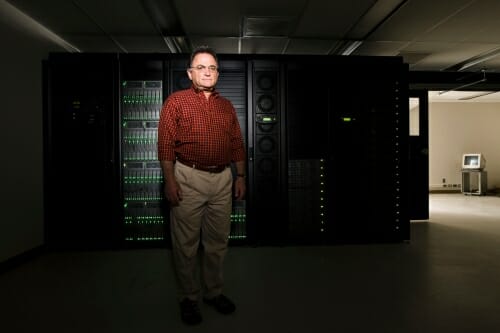

Miron Livny, a UW–Madison computer science professor, is pictured near an enclosed bank of distributed computing equipment in the Computer Sciences and Statistics building.

“Our long-standing partnership with the high energy physics community has been a cornerstone of our quest to advance state-of-the-art, high-throughput computing technologies,” said Miron Livny, John P. Morgridge Professor of Computer Science and CHTC director. “The Institute for Research and Innovation in Software for High-Energy Physics project will sustain this productive relationship that has been transforming the role computing plays in an ever-growing number of disciplines.”

The HL-LHC will build on the work that found the Higgs boson by allowing scientists to study it and other rare phenomena in greater detail. Luminosity is a measure of an accelerator's performance that reflects how many collisions it can produce over time. For example, CERN says that the LHC now produces three million Higgs bosons per year through high-energy particle collisions, while the HL-LHC will produce 15 million.

IRIS-HEP, which is headed by Princeton University, includes a number of institutions around the U.S., among them the Massachusetts Institute of Technology, Stanford University and the University of California, Berkeley.
